Publications

My research aims to develop models that understand temporal dynamics as humans do. I design neural networks that recognize objects, actions, and interactions in videos and train them to understand how these elements evolve over time, often with language supervision.

You can also find my papers on my Google Scholar profile.

Selected Publications

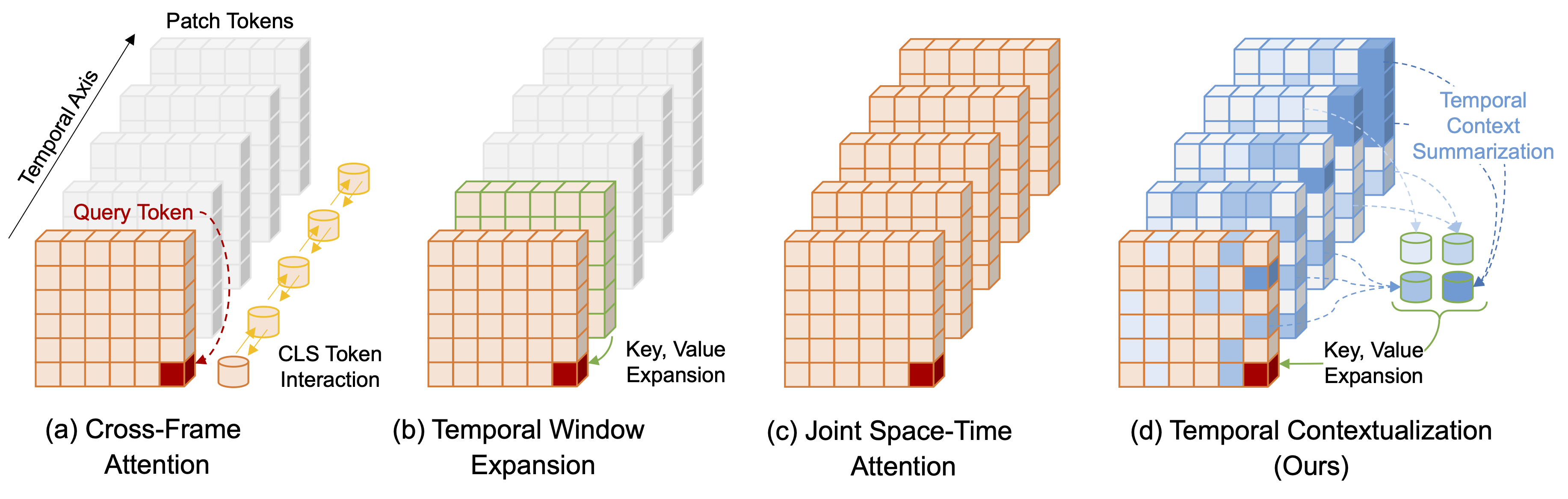

Leveraging Temporal Contextualization for Video Action Recognition

Minji Kim, Dongyoon Han, Taekyung Kim, Bohyung Han

ECCV 2024

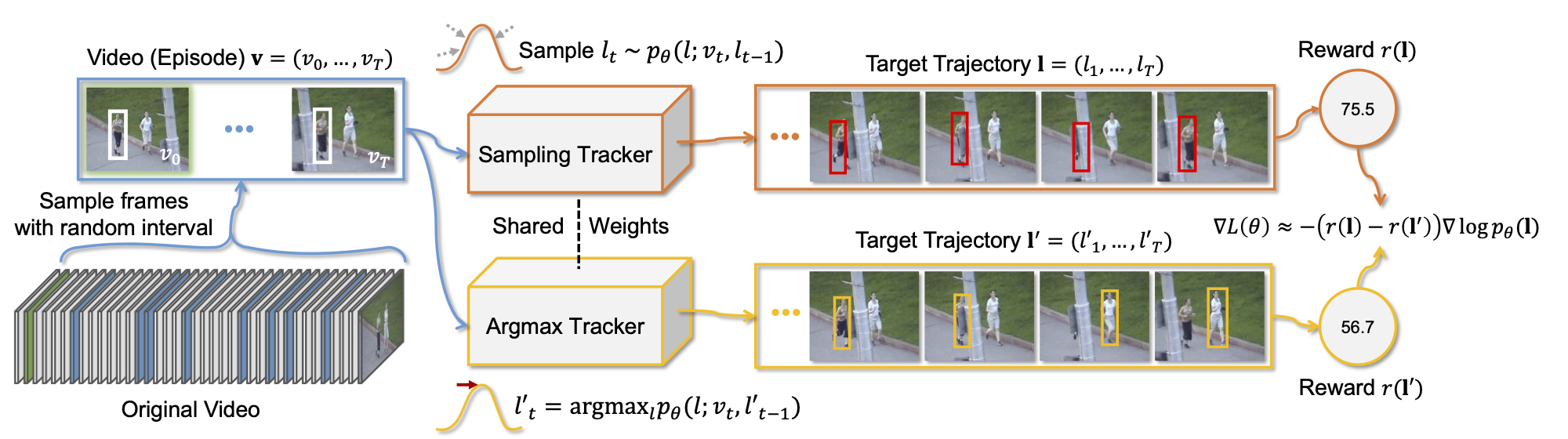

Towards Sequence-Level Training for Visual Tracking

Minji Kim*, Seungkwan Lee*, Jungseul Ok, Bohyung Han, Minsu Cho (*Equal Contribution)

ECCV 2022

Online Hybrid Lightweight Representations Learning: Its Application to Visual Tracking

Ilchae Jung, Minji Kim, Eunhyeok Park, Bohyung Han

IJCAI 2022


Other Projects

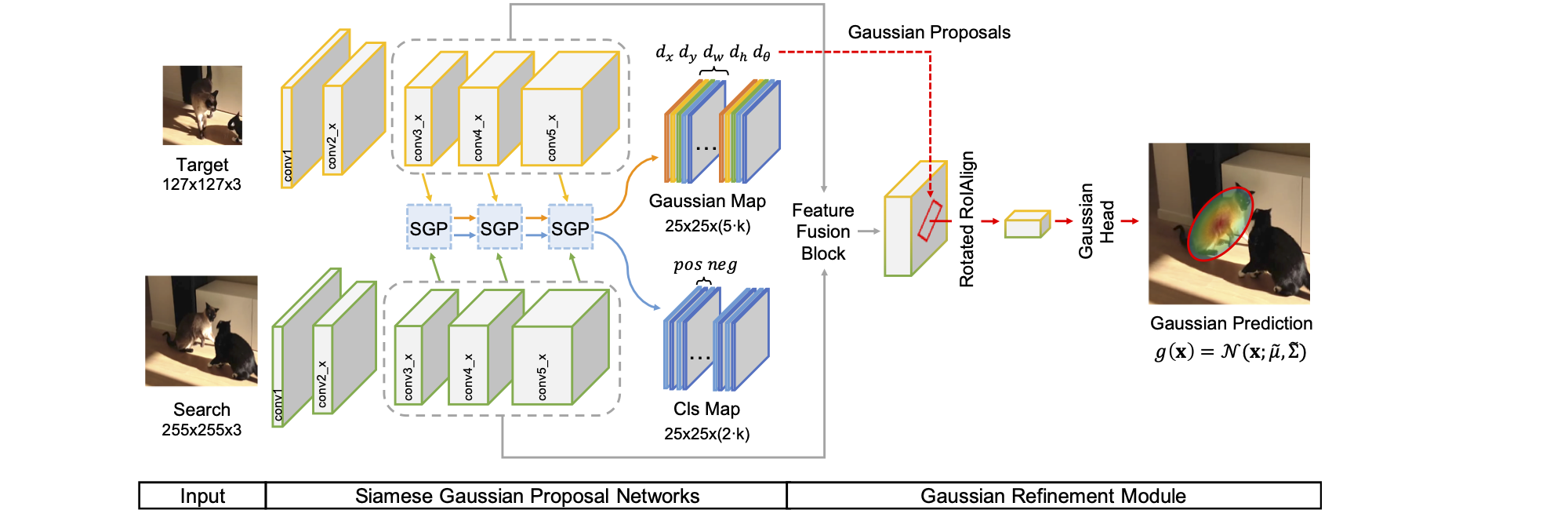

Learning Gaussian Models for Orientation-Aware Visual Tracking

Minji Kim, Bohyung Han

US Patent (App. 18/370,531)

Spatio-Temporal Modeling via Adaptive Frequency Filtering for Video Action Recognition

Minji Kim, Taehoon Kim, Jonghyeon Seon, Bohyung Han

Journal of the Korean Institute of Information Scientists and Engineers (KIISE), 2024

Ensemble Modeling with Convolutional Neural Networks for Application in Visual Object Tracking

Minji Kim, Ilchae Jung, Bohyung Han

Journal of the Korean Institute of Information Scientists and Engineers (KIISE), 2021

Top-down Thermal Tracking Based on Rotatable Elliptical Motion Model for Intelligent Livestock Breeding

Minji Kim, Wonjun Kim

Multimedia Systems, Springer, 2020